Swarm from OpenAI - Routines, Handoffs, and Agents explained (with code)

OpenAI has just released a Python framework called Swarm. They describe it as a lightweight, experimental, and educational framework for multi-agent orchestration, and they state clearly that it is not intended for production use. As a result, it has no official support, which also means pull requests won't be reviewed or merged.

Why Swarm?

The primary goal of Swarm is to showcase the handoff and routines patterns explored in OpenAI's cookbook on orchestrating agents with routines and handoffs. The cookbook introduces the notions of routines and handoffs, walks us through their implementation, and shows how multiple agents can be defined and orchestrated in a simple, powerful, and controllable way. Swarm is the sample repository they created to demonstrate these ideas!

So, I don't expect Swarm to become a production-level framework that many others build on, at least in the near future. For now, it simply demonstrates the idea of routines, and it's up to us how we make use of them. Understanding routines is arguably more valuable than getting very comfortable with the Swarm framework itself. So, let's dive into routines.

Routines

The notion of a routine is not strictly defined. Instead, it is meant to capture the idea of a set of steps. A routine is just a list of instructions in natural language, provided in the system prompt, along with the tools needed to complete them. For example, let's have a look at the system prompt below:

The routine follows two main conventions:

- It provides a list of steps, preferably numbered in order

- The decision points are clearly demarcated with “if” and “ONLY if”

Let's understand the system message through a simple scenario in which the model interacts with the user through a chat app. The model first asks probing questions to understand the user's problem. With that understanding, it proposes a fix and, ONLY if the user is not satisfied, offers a refund. If the user accepts, it searches for the item ID and then executes the refund.
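As an illustration, the routine described above could be written as a system prompt roughly like the one below. This is a sketch modeled on the scenario, not necessarily the exact prompt from the original figure; the company name ACME Inc. and the variable names are hypothetical.

```python
# A hypothetical system prompt that encodes the refund routine as numbered steps.
# Decision points are marked with "if" and "ONLY if", as described above.
SYSTEM_PROMPT = (
    "You are a customer support agent for ACME Inc. "  # hypothetical company
    "Always answer in a sentence or less.\n"
    "Follow this routine with the user:\n"
    "1. First, ask probing questions and understand the user's problem deeper,\n"
    "   unless the user has already provided a reason.\n"
    "2. Propose a fix (make one up).\n"
    "3. ONLY if not satisfied, offer a refund.\n"
    "4. If accepted, search for the ID and then execute the refund.\n"
)

# The routine is simply the system message at the start of the conversation.
messages = [{"role": "system", "content": SYSTEM_PROMPT}]
```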

To implement this, the routine needs two functions: look_up_item, which searches the database for the item ID, and execute_refund, which performs the refund (possibly by invoking a payment method or calling a bank's API).
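Minimal stubs for these two functions might look like the sketch below. The bodies are placeholders (the item ID is hard-coded and no money moves), and the parameter names are assumptions; a real app would query its database and call a payment provider instead.

```python
def look_up_item(search_query: str) -> str:
    """Find an item ID. The search query can be a description or keywords."""
    # Placeholder: a real implementation would query the product database.
    return "item_132612938"  # hypothetical hard-coded ID


def execute_refund(item_id: str, reason: str = "not provided") -> str:
    """Refund the given item."""
    # Placeholder: a real implementation would call a payment or bank API.
    print(f"Refund summary: item={item_id}, reason={reason}")
    return "success"
```

The docstrings matter later: they are a natural source for the tool descriptions the model reads.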

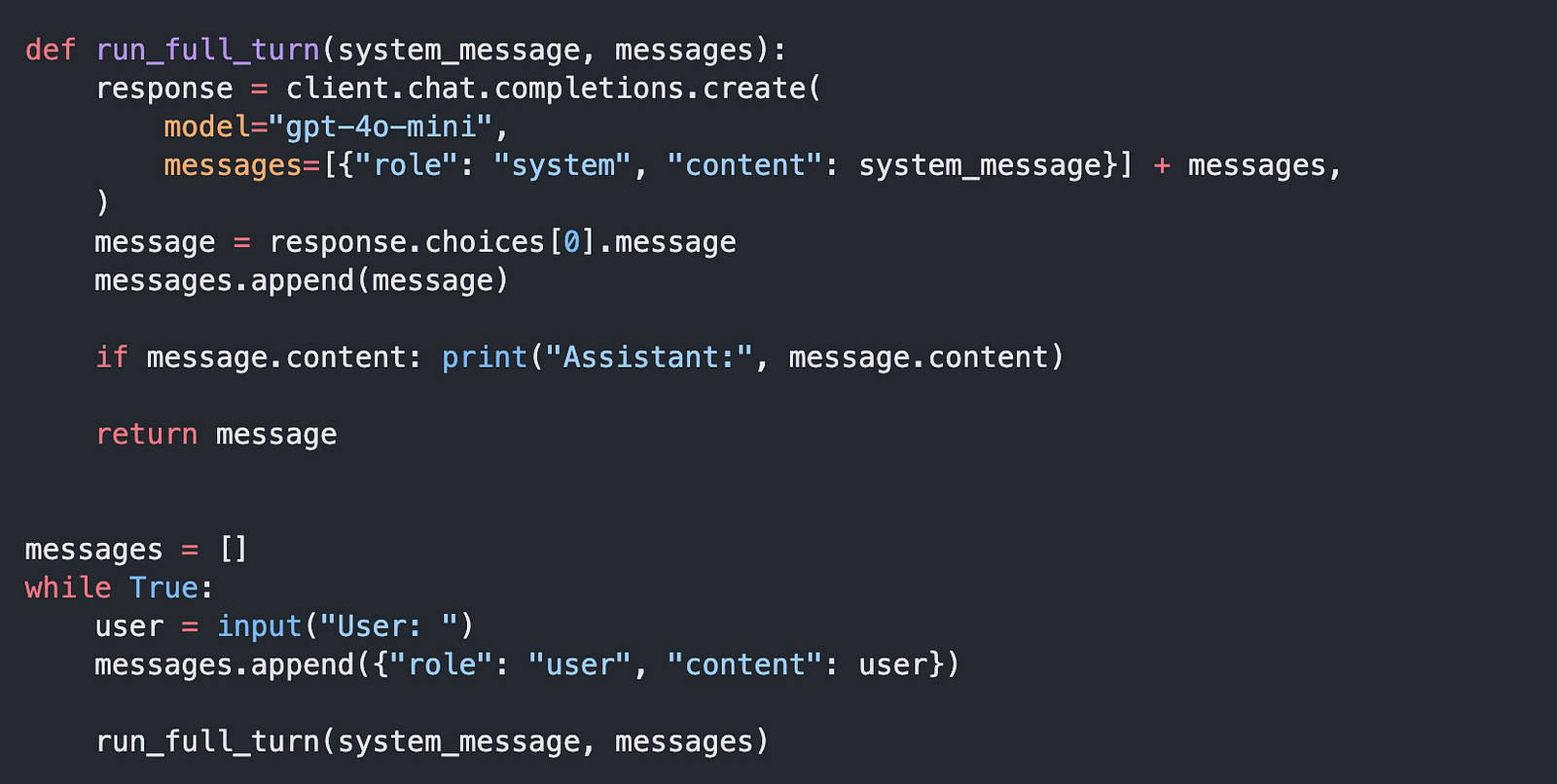

The steps above need to run in a loop so that the user can interact with the bot as many times as they like; on each turn, we append the responses to the message history before feeding it back to the LLM. This is the basic pattern for building a chat app with OpenAI's Chat Completions API, as shown below.
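A minimal sketch of that loop, assuming the official openai Python package (v1 or later) and gpt-4o-mini as the model (any chat model should work). The completion call is passed in as a function so the loop can be tried without an API key; the exit keywords are an arbitrary choice.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]
CompleteFn = Callable[[List[Message]], str]


def openai_complete(messages: List[Message], model: str = "gpt-4o-mini") -> str:
    """Send the conversation to OpenAI's Chat Completions API and return the reply text."""
    from openai import OpenAI  # requires `pip install openai` and OPENAI_API_KEY

    client = OpenAI()
    response = client.chat.completions.create(model=model, messages=messages)
    return response.choices[0].message.content or ""


def chat_turn(messages: List[Message], user_input: str, complete: CompleteFn) -> str:
    """Append the user's message, get the model's reply, and append that too."""
    messages.append({"role": "user", "content": user_input})
    reply = complete(messages)
    messages.append({"role": "assistant", "content": reply})
    return reply


def run_chat(system_prompt: str, complete: CompleteFn = openai_complete) -> None:
    """Keep chatting until the user types 'exit' or 'quit'."""
    messages: List[Message] = [{"role": "system", "content": system_prompt}]
    while True:
        user_input = input("User: ")
        if user_input.strip().lower() in {"exit", "quit"}:
            break
        print("Assistant:", chat_turn(messages, user_input, complete))
```

Because the whole history is resent on every call, the model "remembers" earlier turns only through this growing messages list.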

However, this loop cannot call our functions yet. We can equip it to use functions as tools, but first we need to convert our functions into JSON schemas, because the OpenAI API only accepts tool definitions in that form.
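One way to do the conversion is to read each function's signature with Python's inspect module. The sketch below uses a hypothetical helper, function_to_schema (our own name, not a library call), that only maps basic types and treats unannotated parameters as strings; the output follows the Chat Completions "tools" format.

```python
import inspect
from typing import Any, Callable, Dict, List

# Map Python annotations to JSON Schema types; anything else defaults to "string".
_JSON_TYPES = {str: "string", int: "integer", float: "number",
               bool: "boolean", list: "array", dict: "object"}


def function_to_schema(func: Callable[..., Any]) -> Dict[str, Any]:
    """Build a Chat Completions tool definition from a function's signature and docstring."""
    properties: Dict[str, Any] = {}
    required: List[str] = []
    for name, param in inspect.signature(func).parameters.items():
        properties[name] = {"type": _JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)  # no default value, so the model must supply it
    return {
        "type": "function",
        "function": {
            "name": func.__name__,
            "description": inspect.getdoc(func) or "",
            "parameters": {"type": "object", "properties": properties,
                           "required": required},
        },
    }


def execute_refund(item_id: str, reason: str = "not provided") -> str:
    """Refund the given item."""
    return "success"  # placeholder refund


schema = function_to_schema(execute_refund)
```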

We can now equip the chat completion call with our functions by passing their schemas through the tools parameter, as shown below:
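A sketch of both halves, assuming the openai Python SDK (v1 or later): the schemas are passed through the tools parameter, and when the model asks for a tool, its arguments arrive as a JSON string that we decode and pass to the matching Python function. The schemas are written by hand here, and TOOL_MAP, ask_with_tools, and execute_tool_call are hypothetical names.

```python
import json
from typing import Any, Callable, Dict, List


def look_up_item(search_query: str) -> str:
    """Find an item ID from a description or keywords."""
    return "item_132612938"  # placeholder lookup


def execute_refund(item_id: str, reason: str = "not provided") -> str:
    """Refund the given item."""
    return "success"  # placeholder refund


# Hand-written schemas in the Chat Completions "tools" format.
TOOLS: List[Dict[str, Any]] = [
    {"type": "function", "function": {
        "name": "look_up_item",
        "description": "Find an item ID from a description or keywords.",
        "parameters": {"type": "object",
                       "properties": {"search_query": {"type": "string"}},
                       "required": ["search_query"]}}},
    {"type": "function", "function": {
        "name": "execute_refund",
        "description": "Refund the given item.",
        "parameters": {"type": "object",
                       "properties": {"item_id": {"type": "string"},
                                      "reason": {"type": "string"}},
                       "required": ["item_id"]}}},
]
TOOL_MAP: Dict[str, Callable[..., str]] = {"look_up_item": look_up_item,
                                           "execute_refund": execute_refund}


def ask_with_tools(messages: List[Dict[str, Any]], model: str = "gpt-4o-mini") -> Any:
    """Call Chat Completions with the tool schemas attached (needs the openai package)."""
    from openai import OpenAI

    client = OpenAI()
    response = client.chat.completions.create(model=model, messages=messages, tools=TOOLS)
    return response.choices[0].message  # may carry .tool_calls instead of text


def execute_tool_call(name: str, arguments: str) -> str:
    """Run the Python function the model asked for; arguments arrive as a JSON string."""
    return TOOL_MAP[name](**json.loads(arguments))
```

For each entry in message.tool_calls returned by the API, you would call execute_tool_call(tool_call.function.name, tool_call.function.arguments).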

Practically speaking, the agent might have to invoke these tools more than once. For example, suppose the user has a query about two products. The agent gathers all the details from the user, looks up the first item, and executes a refund. For the second item, it looks up the item but may decide not to execute the refund. To achieve this, it has to go through the tool-invocation loop at least twice. To handle situations like this, we put the tool invocation in a loop and check whether any tool calls are left. If there are none, we break out of the loop and return the message to the user.

Otherwise, we execute each of the tool calls one after the other until none are left, as shown in the pseudocode above.
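Here is a Python sketch of that loop. It assumes each model reply is a plain dict in the Chat Completions message format; make_openai_complete is a hypothetical wrapper around the official openai SDK that produces such dicts, and scripted_complete is a hypothetical offline stand-in for the model. Each tool result goes back as a "tool" message carrying the tool_call_id, which is how the API matches results to calls.

```python
import json
from typing import Any, Callable, Dict, List

Message = Dict[str, Any]
CompleteFn = Callable[[List[Message]], Message]


def make_openai_complete(tools: List[Message], model: str = "gpt-4o-mini") -> CompleteFn:
    """Wrap the openai SDK so each reply comes back as a plain message dict."""
    from openai import OpenAI  # requires `pip install openai` and OPENAI_API_KEY

    client = OpenAI()

    def complete(messages: List[Message]) -> Message:
        msg = client.chat.completions.create(
            model=model, messages=messages, tools=tools
        ).choices[0].message
        reply: Message = {"role": "assistant", "content": msg.content}
        if msg.tool_calls:
            reply["tool_calls"] = [
                {"id": tc.id, "type": "function",
                 "function": {"name": tc.function.name,
                              "arguments": tc.function.arguments}}
                for tc in msg.tool_calls
            ]
        return reply

    return complete


def scripted_complete(replies: List[Message]) -> CompleteFn:
    """Offline stand-in for the model: hands back canned replies in order."""
    queue = list(replies)
    return lambda messages: queue.pop(0)


def run_full_turn(messages: List[Message], complete: CompleteFn,
                  tool_map: Dict[str, Callable[..., Any]]) -> str:
    """Call the model repeatedly, executing tool calls until it answers in plain text."""
    while True:
        message = complete(messages)
        messages.append(message)
        tool_calls = message.get("tool_calls") or []
        if not tool_calls:  # nothing left to run: return the answer to the user
            return message.get("content") or ""
        for call in tool_calls:  # execute each requested tool, one after the other
            func = tool_map[call["function"]["name"]]
            result = func(**json.loads(call["function"]["arguments"]))
            # The tool_call_id lets the model match each result to its call.
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": str(result)})
```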

This way of handling tools in a routine works, but what happens when the same routine has to manage many more tools? Rather than complicating the routine, we can introduce agents. 😃

Agents

If we group the tools into separate, specialist sets and pair each set with a model dedicated to a specialist role, we get an agent.

For example, the sales agent is equipped to look up items in the database (look_up_item). Similarly, the refund agent can handle any refunds the user requests (execute_refund).

We can define each agent as an instance of an Agent class. Every agent has a name, a model, and specific instructions describing how it should behave, as shown in the figure above. It is also equipped with the tools that help it carry out its duties and responsibilities.
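A minimal sketch of such an Agent, using a plain Python dataclass as a stand-in (the Agent classes in Swarm and the cookbook differ in details, such as field names). The instruction strings and the default model are placeholders.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class Agent:
    """A specialist: a name, a model, its instructions (routine), and its tools."""
    name: str = "Agent"
    model: str = "gpt-4o-mini"
    instructions: str = "You are a helpful agent."
    tools: List[Callable[..., str]] = field(default_factory=list)


def look_up_item(search_query: str) -> str:
    """Find an item ID from a description or keywords."""
    return "item_132612938"  # placeholder lookup


def execute_refund(item_id: str, reason: str = "not provided") -> str:
    """Refund the given item."""
    return "success"  # placeholder refund


sales_agent = Agent(
    name="Sales Agent",
    instructions="You are a sales agent. Help the user find items.",  # hypothetical wording
    tools=[look_up_item],
)
refund_agent = Agent(
    name="Refund Agent",
    instructions="You are a refund agent. Help the user with refunds.",
    tools=[execute_refund],
)
```

When running an agent, its instructions become the system message and only its own tools are converted to schemas, which keeps each model call focused on one role.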

Handoffs

We have grouped the tools and divided responsibilities by giving each agent its own tools. But how do the agents communicate with each other? This is where handoffs come in.

Handing off the conversation to another agent is similar to transferring a user query to a teammate who specializes in a particular domain.

To ease communication between agents, an agent can have a "transfer" tool that returns the next agent, which then takes over responsibility for responding.

With our existing setup, this can be achieved if a tool simply returns the agent that should take over. In the example below, the sales agent has a transfer_to_refunds tool to hand off to the refund agent.

These tools (which are just functions) that transfer control from one agent to another are called handoff functions.
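A sketch of how a handoff can slot into the tool loop, under stated assumptions: Agent is a simplified dataclass, and complete is a caller-supplied (hypothetical) function that sends agent.instructions as the system message and agent.tools as JSON-schema tools, returning the assistant message as a dict in the Chat Completions format. When a tool returns an Agent, the loop switches the active agent, so the next model call runs with the new agent's instructions and tools.

```python
import json
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Tuple

Message = Dict[str, Any]


@dataclass
class Agent:
    name: str
    instructions: str = ""
    tools: List[Callable[..., Any]] = field(default_factory=list)


def execute_refund(item_id: str, reason: str = "not provided") -> str:
    return "success"  # placeholder refund


refund_agent = Agent(name="Refund Agent",
                     instructions="You are a refund agent. Help the user with refunds.",
                     tools=[execute_refund])


def transfer_to_refunds() -> Agent:
    """Handoff function: returning an Agent tells the loop who should respond next."""
    return refund_agent


sales_agent = Agent(name="Sales Agent",
                    instructions="You are a sales agent.",
                    tools=[transfer_to_refunds])


def run_turn(agent: Agent, messages: List[Message],
             complete: Callable[[Agent, List[Message]], Message]) -> Tuple[Agent, str]:
    """Run tool calls (including handoffs) until the active agent replies in text."""
    while True:
        reply = complete(agent, messages)  # model sees this agent's instructions and tools
        messages.append(reply)
        calls = reply.get("tool_calls") or []
        if not calls:
            return agent, reply.get("content") or ""
        tools = {f.__name__: f for f in agent.tools}  # tools of the agent that made the calls
        for call in calls:
            result = tools[call["function"]["name"]](**json.loads(call["function"]["arguments"]))
            if isinstance(result, Agent):  # handoff: switch the active agent
                agent = result
                result = f"Transferred to {agent.name}."
            messages.append({"role": "tool", "tool_call_id": call["id"],
                             "content": str(result)})
```

Returning the active agent alongside the text lets the next user turn continue with whichever agent ended up in charge.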

That's pretty much the gist of routines, handoffs, and agents in Swarm. If you understand these ideas, you are ready to use Swarm! 😃

Visual Explanation

If you would like a more detailed, visual walkthrough, please check out the YouTube video that expands on the ideas we just covered:

See you in my next post. Take care...