Donut - the first OCR free PDF parser using transformers

In my previous articles, we saw about rule-based PDF parsing and pipeline-based PDF parsing for building RAG systems. Next comes learning-based PDF parsing methods which employ some form of machine learning to bring intelligence into the parsing step.

More specifically, let's look into Donut which treats PDFs as images and does document understanding rather than just parse them.

Limitations of pipeline-based methods

One of the major limitations of pipeline-based methods is that they employ an OCR system and so their accuracy is limited only to that of the OCR system. With deep learning touching upon every single area of software, we can put to use some deep learning models to achieve better PDF parsing. More specifically, we steer towards transformer-based models. One such model is the Donut. So let's dive deep into Donut in this article.

Donut stands for DOcumeNt Understanding Transformer. Instead of relying on off-the-shelf Optical Character Recognition engines, DONUT uniquely leverages transformers. Along the way, it overcomes some of the drawbacks of OCR systems which are,

- High computational cost for OCR

- Inflexibility of OCR systems with different languages

- Parsing accuracy is limited to OCR employed in the parsing pipeline

Why learn Donut?

Here are some highlights that motivate us to learn about Donut:

- Donut is the first OCR free transformers based model

- Donut is for Visual Document Understanding where pipeline-based models fail. Examples include taking a picture of a receipt and trying to parse it to store it straight into a database. Tickets, business cards, and receipts are all good candidates.

- Donut is more of an AI parser, meaning it understands the document rather than simply trying and parse

Simple but cool Architecture

With that motivation, let's dive straight into the Donut architecture. Below are the main building blocks of the architecture:

- First, the photo of the document is passed through an encoder. Though any transformer-based architecture will do the trick, they have used Swin Transformer. If you wish to understand the Swin Transformer, please check out my video to go into the details.

- The output of the last layer of the encoder is fed into a decoder. Donut uses BART as the decoder, though there are a few options. The BART model also takes in prompts as input thereby enabling us to control the generation using prompts. In the figure above, we can see, “What is the price of choco mochi?” as the prompt to the decoder.

- Lastly, the generated outputs are converted to structured JSON format which is much more convenient for further usage.

Teacher Forcing Training

For training the setup they use something called the teacher-forcing strategy. In this strategy, the model is trained with the loss between the input and generated output token. This is unlike usual where use the previous input token. The reason for this choice is that we are using the model more like an OCR system and we do not expect any sequence dependency in this task Numbers in receipts or tickets do not have any dependency on numbers before them!

However, during inference, we use the previous token as shown in the below figure.

Fine-tuning

The generated output must be in JSON format. So the model is fine-tuned for this. Fine-tuning involved training to generate particular tokens to include formatting. For example, after fine-tuning the model generates [START_class][memo][END_class] which can readily be converted into a JSON with some basic regular expressions.

Hands-On

Now that we have seen how Donut works, it's time to get our hands dirty with a quick colab notebook.

The notebook is available here.

Step 1 — Install the required

Though Donut is open source and available through its own repo, we will use the transformers library from HuggingFace here. We also need the sentencepiece as there is a dependency on it. And of course, there is PyTorch. As the model does visual understanding of the document, we need to convert the PDF into an image so pdf2image library is also needed.

!pip install transformers

# !pip install torch

!pip install Pillow

!pip install sentencepiece

!pip install datasets

!pip install pdf2image

Step 2 — Import packages

We import the needed packages next. The most important classes here are DonutProcessor and VisionEncoderDecoderModel. As the name suggests, the DonutProcessor is the specific processor that implements the Donut model logic.

import torch

import re

from transformers import DonutProcessor, VisionEncoderDecoderModel

from PIL import Image

from pdf2image import convert_from_path

Step 3 — Model and processor

With the imported classes, let's create the processor and model objects we will be using. We will be using the fine-tuned model as we need JSON as our output.

processor = DonutProcessor.from_pretrained("naver-clova-ix/donut-base-finetuned-cord-v2")

model = VisionEncoderDecoderModel.from_pretrained("naver-clova-ix/donut-base-finetuned-cord-v2")

device = "cuda" if torch.cuda.is_available() else "cpu"

model.to(device)

Step 4 — Read the input as an image

The whole advantage of Donut is its ability to parse photos. So let's read the image and extract the pixel values as a tensor. We need to make sure we normalize the pixel values to be between 0 and 1.

# load document image

image_lst = convert_from_path("/content/invoice_sample.pdf")

image = image_lst[0]

ask_prompt = "<s_cord-v2>"

decoder_input_ids = processor.tokenizer(task_prompt, add_special_tokens=False, return_tensors="pt").input_ids

pixel_values = processor(image, return_tensors="pt").pixel_values

# normalize pixel values before feeding the model

pixel_values -= pixel_values.min()

pixel_values /= pixel_values.max()

pixel_values

Step 5 — Process and generate

Let's feed the pixel values to the processor and ask the model to generate our output. Lastly, let's visualize what the model has done.

outputs = model.generate(

pixel_values.to(device),

decoder_input_ids=decoder_input_ids.to(device),

max_length=model.decoder.config.max_position_embeddings,

pad_token_id=processor.tokenizer.pad_token_id,

eos_token_id=processor.tokenizer.eos_token_id,

use_cache=True,

bad_words_ids=[[processor.tokenizer.unk_token_id]],

return_dict_in_generate=True,

)

sequence = processor.batch_decode(outputs.sequences)[0]

sequence = sequence.replace(processor.tokenizer.eos_token, "").replace(processor.tokenizer.pad_token, "")

sequence = re.sub(r"<.*?>", "", sequence, count=1).strip() # remove first task start token

# visualize the result

result = processor.token2json(sequence)

print(result)

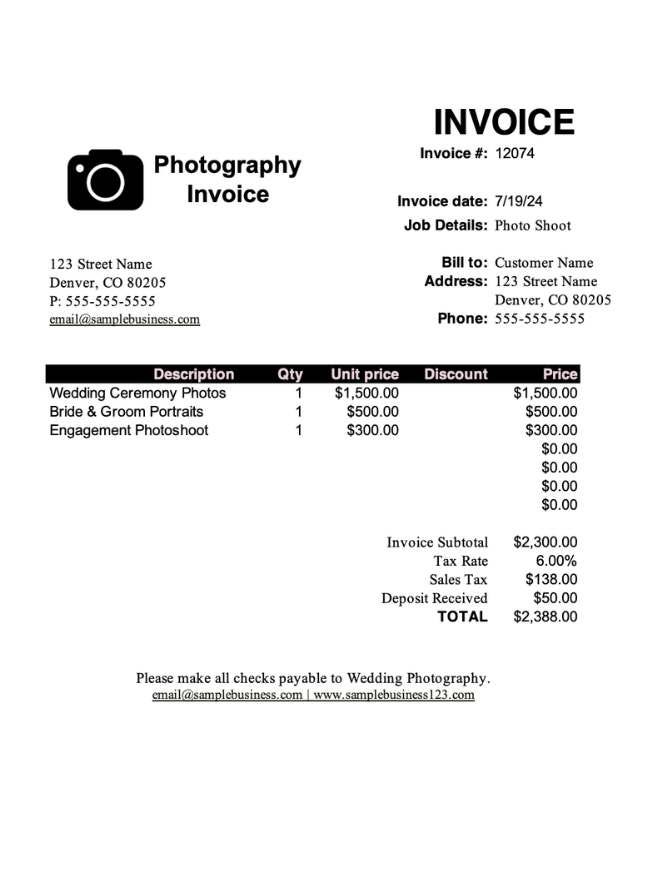

And here we go, the output generated for the input image which is a simple invoice taken from Google images as shown in the below figure.

{'menu': [{'nm': 'Photography', 'discountprice': '12074', 'price': 'INVOICE'}, {'nm': 'Invoice date: 7/19/24', 'num': 'Job Details: Photo Shoot', 'price': 'Shoot'}, {'nm': 'Street Name', 'num': '123', 'price': 'Name'}, {'nm': 'Denver, CO 8020S', 'num': 'Address: 123', 'price': 'Street Name'}, {'nm': {'price': 'Denver, CO 80205'}, 'num': 'Phone: 555-555-5555', 'price': {'discountprice': {'cnt': '1'}}}, {'nm': 'Wedding Ceremony Photos', 'unitprice': '$1,500.00', 'cnt': '1', 'price': '$1,500.00'}, {'nm': 'Bride & Groom Portraits', 'unitprice': '$500.00', 'cnt': '1', 'price': '$500.00'}, {'nm': 'Engagement Photoshop', 'unitprice': '$300.00', 'cnt': '1', 'price': '$300.00'}, {'cnt': '1', 'price': '$0.00'}, {'cnt': '1', 'price': '$0.00'}], 'sub_total': {'subtotal_price': '$2,300.00', 'tax_price': '6.00%'}, 'total': {'total_price': '$138.00', 'creditcardprice': '$50.00'}}

Conclusion

What we have just seen is an example of document parsing as we are focussing on PDF parsing here. But as the model is a sophisticated transformer model it has the potential to do document Q&A and also document classification. I leave that as an exercise as it only needs a simple tweak to the above code!